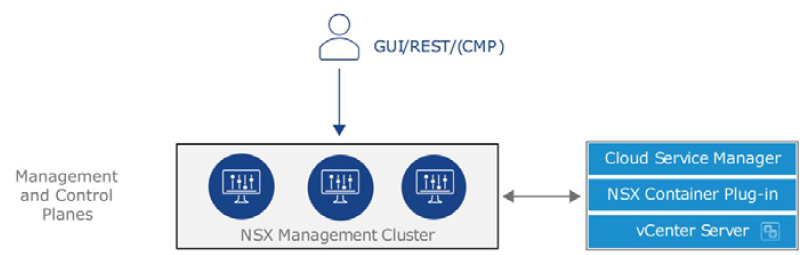

A previous blog post explained the NSX-T architecture, so we can now start implementing NSX-T. Deploying NSX-T Data Center begins with deploying the NSX-T management cluster. In NSX-T 3.0, the management cluster consists of three NSX-T Managers, each of which includes both the management plane and the control plane. The management plane provides the web UI, the REST API, and an interface to other management platforms such as vCenter Server, vCloud Director, or vRealize Automation. The control plane is responsible for computing and distributing the network runtime state.
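Because the management plane exposes a REST API, the state of both planes can be checked from a script once the cluster is up. Below is a minimal Python sketch, not an official client. It assumes the `GET /api/v1/cluster/status` endpoint with basic authentication and a response containing `mgmt_cluster_status` and `control_cluster_status` objects; verify both against the API reference for your NSX-T version. The function names and the host and credential parameters are hypothetical placeholders.

```python
import json
import ssl
import urllib.request
from base64 import b64encode


def fetch_cluster_status(manager_host, username, password, verify_tls=True):
    """Fetch the cluster status document from an NSX-T Manager.

    Assumption: the manager serves GET /api/v1/cluster/status and accepts
    HTTP basic authentication. Check the API guide for your release.
    """
    url = f"https://{manager_host}/api/v1/cluster/status"
    token = b64encode(f"{username}:{password}".encode()).decode()
    request = urllib.request.Request(
        url,
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
    )
    context = ssl.create_default_context()
    if not verify_tls:
        # Lab use only: skip certificate checks for self-signed certificates.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=context, timeout=10) as response:
        return json.load(response)


def summarize_cluster_status(payload):
    """Reduce the status document to the state of each plane.

    Assumption: field names follow the documented response layout; any
    missing field is reported as UNKNOWN rather than raising an error.
    """
    mgmt = payload.get("mgmt_cluster_status", {})
    ctrl = payload.get("control_cluster_status", {})
    return {
        "management": mgmt.get("status", "UNKNOWN"),
        "control": ctrl.get("status", "UNKNOWN"),
        "online_managers": len(mgmt.get("online_nodes", [])),
    }
```

For example, `summarize_cluster_status(fetch_cluster_status("nsx-mgr.example.local", "admin", "password"))` would return one status string per plane plus the number of managers reported online; the host name and credentials here are placeholders.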

NSX-T Managers can be deployed on the ESXi or KVM hypervisor. If you plan to host the NSX-T Managers on ESXi, use the OVA file; on KVM, use the QCOW2 image instead. Note that mixed deployments of managers across both ESXi and KVM are not supported. Select the NSX-T Manager node size based on the type of deployment and the size of the environment. The following are the four configuration options and their requirements.

Continue reading “Deploying NSX-T Management Cluster”